Post by Prof. Stephen Horowitz, Professor of Legal English

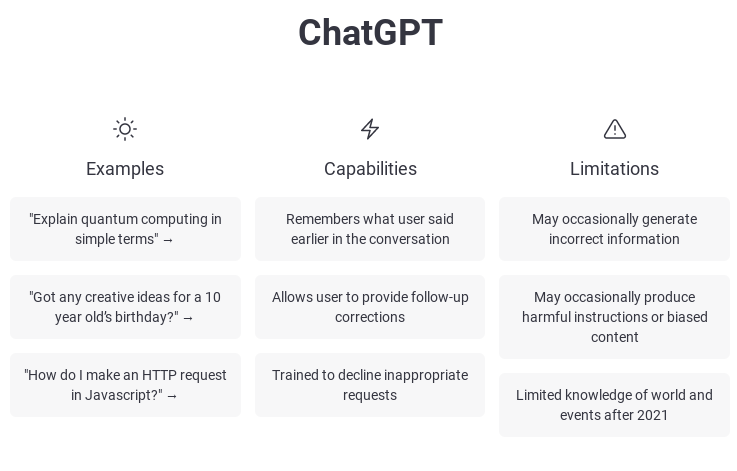

As we start to shift past the “wow” factor of AI and ChatGPT (see, e.g., this very cool post from the FCPA Blog posing questions to ChatGPT related to the Foreign Corrupt Practices Act, and also this academic article titled “GPT Takes the Bar Exam”), I’ve seen articles and social media posts and heard commentary focused on the potential plagiaristic dangers of ChatGPT, the artificial intelligence-fueled chatbot that can produce complex, natural-sounding essays in a matter of seconds:

- “The End of High School English” by Daniel Herman in The Atlantic

- This recent episode of the Stay Tuned with Preet podcast titled “ChatGPT and the Future of AI (with Rebecca Heilweil).”

- This post from the Language Log blog, titled “Detecting LLM-created essays?”, about being able to identify essays created by Large Language Models like ChatGPT. (And yes, it does appear that, unhelpfully for those of us in the legal English world, there is now a new and confusing meaning of LLM! Maybe some of us need to start being more intentional about including those periods in LL.M. 🙂)

But my initial reaction was less one of concern and more along the lines of, “What a great potential legal English tool! How can we use this to help our LLM students learn better?”

And this thinking feels connected to what I’ve read in articles like “AI and the Future of Undergraduate Writing” by Beth McMurtrie in The Chronicle of Higher Education, which essentially says that the horse is out of the barn, so the real questions are how we as teachers and educational institutions are going to adapt our assessment methods and how we can use this as a teaching tool. (This is really the underlying point of “The End of High School English” as well.)

Some of my own tests of ChatGPT, by the way, have included:

1) To ask it to “write an essay comparing Marie Antoinette and Rachel Carson,” the idea being to see if it could find connections between two seemingly unrelated people. And it did this quite effectively, acknowledging the lack of connection but finding comparison and contrast in that they were women of different social status who had certain accomplishments. About as good as I could expect from any student given a similar question.

2) To give it the issue spotter question, the policy question and the multiple choice questions from the recent exam we gave to our 2-Year LLM Legal English students on the topic of tort law. Again I was impressed that it could understand the long fact pattern and spit out a reasonably competent essay on negligence and the relevant issues. It missed a few issues and lacked context to confine the discussion to the issues covered in our course, which I suppose would be a “tell” if I were looking for evidence of plagiarism. And it kept discussing an unrelated case of the same name which was referenced in the policy question, even when I re-submitted the question and put in the actual citation and mentioned the court and year. But it did surprisingly well on the multiple choice questions, one of which listed about eight different situations and asked the exam taker to identify which ones would meet the standard for the “open and obvious” defense.

3) To let my 9-year-old daughter play with it. She asked it a series of questions (some of which would not be appropriate to share here, particularly those that inquired about topics related to flatulence). And then, I noticed, she followed along with rapt attention as the answers poured forth.

“This is a great form of extensive reading!” I thought. She’s reading things at a level that she can handle… on topics that interest her… and in a way that gives her total control over the process.

And then my mind quickly jumped to LLM students. This is a fantastic and easily accessible way to be able to interact with a “native English speaker” at a relatively sophisticated level and on topics that are of interest to the student.

Example 1: Reading the kind of writing that we expect students to be able to produce

One of my great laments in the teaching of legal writing to LLM students is that they don’t get to read sufficient quantities of the kind of writing we expect them to produce, i.e., that there’s a sort of asymmetry in the level of English they read versus the level they need to be able to write. They read plenty of court opinions, textbooks, and articles written by native English speaking writers whose work has been highly organized and edited by publishers and editors. While it would be great if LLM students could learn to write like that, that’s not generally the level of writing we expect from JD students–let alone LLM students–especially on anything written under time pressure.

Based on my teaching experience, the best way to develop a sense of what looks and sounds right, which is what we’re really asking of LLM students, is for them to have the opportunity to read ample amounts of the kind of writing we expect them to produce. Typically, LLM students might get to read a sample or two of a “best answer” from an exam or legal writing assignment. But while they may read hundreds of pages from textbooks and cases, they do not read anywhere near the equivalent number of essays or other assignments written by average native English speaking JD students.

ChatGPT, which writes in a relatively natural, clear, plain-English style, is a potentially ideal source of such reading material for LLM students. It can give them exposure to sufficient quantities of this style of comprehensible input, which can in turn help them develop a stronger intuitive sense of parameters and voice in their own writing.

Additionally, if students are given active-noticing tasks to use with the texts, then the students can also develop a stronger sense of discourse patterns, appropriate cohesive devices, and grammar among other language and writing features that might not otherwise be absorbed via the “natural method.”

Example 2: Personalized Newsela

With ChatGPT, a student can create their own, personalized version of Newsela.com. Newsela.com is an extensive reading resource composed of a vast library of authentic news articles, all written (and re-written) at five different levels of difficulty. The idea is that a student can select an article that interests them and then find the “just right” level that enables the student to read the article with relative ease.

Applying this concept to ChatGPT use, if an LLM student is reading a court opinion and finds part of the opinion difficult to understand, they could paste the relevant text into ChatGPT and ask it to summarize the text. And if that is still a bit too complicated, the student can ask ChatGPT to “say it in a simpler way.” See below:

Me: Summarize this paragraph: “To be sure, Miller also cited Roper and Graham. 567 U. S., at 471–475. Roper barred capital punishment for offenders under 18. And Graham barred life without parole for offenders under 18 who committed non-homicide offenses. But Miller did not cite those cases to require a finding of permanent incorrigibility or to impose a categorical bar against life without parole for murderers under 18. We know that because Miller said so: “Our decision does not categorically bar a penalty for a class of offenders or type of crime—as, for example, we did in Roper or Graham.” 567 U. S., at 483. Instead, Miller cited Roper and Graham for a simple proposition: Youth matters in sentencing. And because youth matters, Miller held that a sentencer must have discretion to consider youth before imposing a life-without-parole sentence, just as a capital sentencer must have discretion to consider other mitigating factors before imposing a death sentence.”

ChatGPT: The case of Miller v. Alabama involved the issue of imposing life without parole sentences on murderers under the age of 18. The case cited the cases of Roper and Graham, which barred capital punishment for offenders under 18 and life without parole for offenders under 18 who committed non-homicide offenses, respectively. However, Miller did not use these cases to require a finding of permanent incorrigibility or impose a categorical ban on life without parole for murderers under 18. Instead, Miller held that the youth of the offender should be taken into consideration before imposing a life without parole sentence, just as other mitigating factors are considered before imposing a death sentence.

Me: Can you write that in an even simpler way?

ChatGPT: The case of Miller v. Alabama addressed the issue of sentencing murderers under the age of 18 to life without parole. The case cited previous cases that had banned certain types of harsh sentences for juveniles, but did not impose a blanket ban on life without parole for all juvenile murderers. Instead, the case stated that the youth of the offender should be considered as a factor in sentencing.

An approach like this could help an LLM student comprehend texts that use unfamiliar phrasal verbs, figures of speech, and cultural information. It also provides multiple opportunities to read the same content written in different ways. This is itself a form of extensive reading and is also great for helping students absorb the language and language forms needed for paraphrasing and summarizing, skills that are notoriously challenging to teach to LLM students.

Example 3: Instant Translation

This one is a bit of a non-issue I think, or at least not a new issue, because students already know about and make use of Google Translate. But it’s good to be aware that ChatGPT can translate into a wide range of languages because I had naively and America-centrically initially assumed it was just English. And by the way, if you’re wondering which is the better translator, I asked ChatGPT and received a surprisingly modest answer:

“Google Translate is generally considered to be more accurate and reliable than ChatGPT. Google Translate is a machine translation service developed by Google that can automatically translate text and websites from one language into another. It uses advanced machine learning algorithms and a large database of previously translated texts to produce translations that are generally of high quality. ChatGPT, on the other hand, is a chatbot that uses a variant of the GPT (Generative Pre-training Transformer) language model to generate responses to user input. While ChatGPT can produce coherent and engaging responses, it is not designed specifically for translation and may not always produce accurate translations. In general, it is recommended to use a specialized machine translation service such as Google Translate for translation tasks, rather than relying on a chatbot like ChatGPT.”

ChatGPT, Dec 19, 2022 around 10:30am EST (Side note: I suddenly feel like I need to include time of day in the citation since ChatGPT is always learning and may produce a different result in response to the same question at a later date. Am I overthinking it?)

For more on ChatGPT translation vs Google Translate, check out this Reddit discussion titled “Comparing ChatGPT with Google Translate” as well as this other Reddit discussion titled “Translating Chinese to English: ChatGPT vs Google Translate” which sets up a chart showing the original text of an article in Chinese from the Wall St. Journal next to a translation into English via ChatGPT and another translation into English by Google Translate.

But since we’re on the topic, two general categories occur to me as ways an LLM student might use ChatGPT as a beneficial tool:

1) Scaffolding, i.e., a way to take difficult-to-understand text and create a version in their L1 (i.e., their first language) to help them better comprehend what they read in English. Having a better sense of what they’re reading in English will enable them to build more reading fluency when they read the English version.

2) Fixing language issues in their writing, i.e., an LLM student could write an email, an essay, an article, etc. and then run it through ChatGPT and ask it to “fix” any language or grammar issues. Yes, of course this can be misused and abused. But think of all the times a law student or lawyer may need to email someone in English and want to make sure they don’t embarrass themselves or distract from their intended meaning with language errors or word and grammar choices that don’t quite sound right to a native speaker’s ears. And think of how they don’t generally have someone right by them who is able and willing to check their email.

I’ve now tried this using text from emails that students have sent me in the past, and ChatGPT does a pretty nice job of cleaning things up–though definitely not perfect. Here’s one sample:

Student: “I feel really sad that I missed the gathering on Tuesday since I’m doing the internship that day, will you still have lunch/coffee in the cafeteria today? If so, I would like to join in!”

ChatGPT: “Thank you for the email. I’m sorry that I won’t be able to make it to the gathering on Tuesday because I have an internship that day. Will you still be having lunch or coffee in the cafeteria today? If so, I would love to join you.”

The ChatGPT version definitely lacks the writing accent that would indicate to me that the writer is not a native speaker of English. But it missed the nuance that the writer already missed the gathering, instead treating the gathering as something that will happen in the near future.

Here are two other examples that do a better job of hitting the nail on the head, so to speak. (Note: These are individual sentences taken from a longer email. ChatGPT undoubtedly draws from the context of the full email.)

Student: “It was felt like you hit me some points or some sparks.”

ChatGPT: “It feels like you have hit the nail on the head with some of your comments and provided some valuable insights.”

Student: “I realize what I really want is to enrich my career, insist on my purpose, systematacially improving my legal capacity and more.”

ChatGPT: “Through your guidance, I have realized that what I really want is to enrich my career, stay true to my purpose, and systematically improve my legal capacity.”

Very nice! Of course, this isn’t a perfect solution as the writer may not be able to have full confidence in the language accuracy of ChatGPT’s output. But you can’t knock it as a solution to have in one’s back pocket.

Of course, if you apply this same strategy to a legal argument, the potential negative consequences of a grammar or word choice decision increase significantly as entire meanings, and in turn conclusions, can hinge on otherwise minute differences in language.

But to flip this around from a problem into a solution, a helpful assignment could involve asking students not only to write an assignment, but also to run it through ChatGPT and then compare the output to their original in order to 1) try to identify any remaining errors, and 2) develop a better sense of what ChatGPT changed and why. And if you want to take it a step further, assign students in pairs to compare their analyses of their texts and then present to the class on what they noticed and learned from it.

What else?

These are just initial thoughts based on limited experience. What are some other ways that a tool like ChatGPT could be employed to help LLM students improve their legal English? Surely, many LLM students will figure out various strategies and tricks on their own. So maybe at some point we can figure out a way to engage them in the process. But as experienced instructors focused on our students’ learning, surely we have many ideas as well.

Feel free to share yours in the comments section below. (Or better yet, ask ChatGPT what it thinks and paste its comments instead!)

An additional idea I just thought of: one could have a task or assignment where students practice constructing or asking hypotheticals based on cases studied and then see what kind of responses are generated, perhaps with an eye towards identifying a hypothetical that will lead to a response that falls within certain parameters.