The good news: Yes, it probably can!

The bad news: But it’s not the LLMs you’re probably thinking of.

I recently noticed in the abstract for the article “GPT Takes the Bar Exam” that the last line reads:

“While our ability to interpret these results is limited by nascent scientific understanding of LLMs and the proprietary nature of GPT, we believe that these results strongly suggest that an LLM will pass the MBE component of the Bar Exam in the near future.”

At first I did a double take and had to re-read the full abstract to understand how in the heck GPT’s relative success in answering bar exam questions could portend that one lucky future LLM student will pass the multiple-choice section of the bar exam.

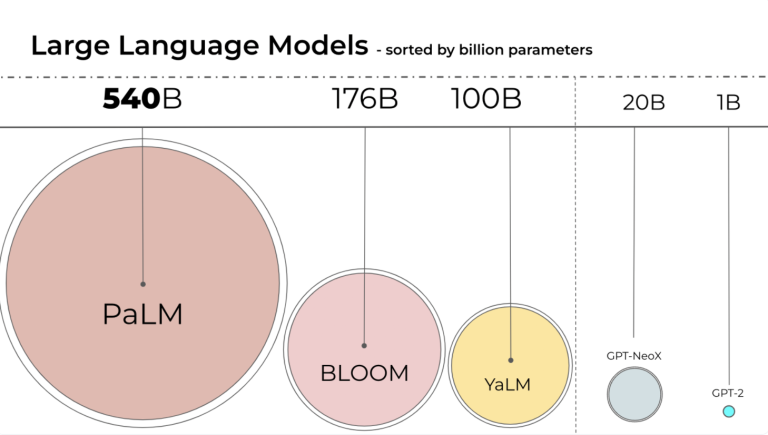

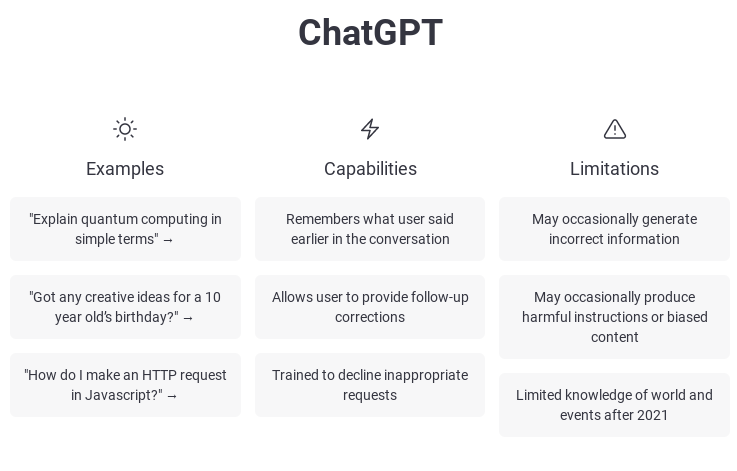

Then I remembered that LLM is different from LL.M. In the context of artificial intelligence, LLM means “Large Language Model,” which is the term used to describe what ChatGPT is. That is obviously very different from the LL.M., the Master of Laws or Legum Magister, which refers to a one-year degree at a law school and which is often associated with international students at US law schools.

This is clearly a distinction that those of us in the legal English field will have to get used to in order to avoid potential confusion in the future. It also suggests that the periods in “LL.M.” may need to come back in fashion for those out there (like me) who have been trying to get away with leaving them out in the name of efficiency.

Here’s the full abstract, in case it’s of interest:

**********************************

GPT Takes the Bar Exam

13 Pages Posted: 31 Dec 2022

Michael James Bommarito

273 Ventures; Licensio, LLC; Bommarito Consulting, LLC; Michigan State College of Law; Stanford Center for Legal Informatics

Daniel Martin Katz

Illinois Tech – Chicago Kent College of Law; Bucerius Center for Legal Technology & Data Science; Stanford CodeX – The Center for Legal Informatics; 273 Ventures

Date Written: December 29, 2022

Abstract

Nearly all jurisdictions in the United States require a professional license exam, commonly referred to as “the Bar Exam,” as a precondition for law practice. To even sit for the exam, most jurisdictions require that an applicant completes at least seven years of post-secondary education, including three years at an accredited law school. In addition, most test-takers also undergo weeks to months of further, exam-specific preparation. Despite this significant investment of time and capital, approximately one in five test-takers still score under the rate required to pass the exam on their first try. In the face of a complex task that requires such depth of knowledge, what, then, should we expect of the state of the art in “AI?” In this research, we document our experimental evaluation of the performance of OpenAI’s text-davinci-003 model, often-referred to as GPT-3.5, on the multistate multiple choice (MBE) section of the exam. While we find no benefit in fine-tuning over GPT-3.5’s zero-shot performance at the scale of our training data, we do find that hyperparameter optimization and prompt engineering positively impacted GPT-3.5’s zero-shot performance. For best prompt and parameters, GPT-3.5 achieves a headline correct rate of 50.3% on a complete NCBE MBE practice exam, significantly in excess of the 25% baseline guessing rate, and performs at a passing rate for both Evidence and Torts. GPT-3.5’s ranking of responses is also highly correlated with correctness; its top two and top three choices are correct 71% and 88% of the time, respectively, indicating very strong non-entailment performance. While our ability to interpret these results is limited by nascent scientific understanding of LLMs and the proprietary nature of GPT, we believe that these results strongly suggest that an LLM will pass the MBE component of the Bar Exam in the near future.

Keywords: GPT, ChatGPT, Bar Exam, Legal Data, NLP, Legal NLP, Legal Analytics, natural language processing, natural language understanding, evaluation, machine learning, artificial intelligence, artificial intelligence and law

JEL Classification: C45, C55, K49, O33, O30

Suggested Citation:

Bommarito, Michael James and Katz, Daniel Martin, GPT Takes the Bar Exam (December 29, 2022). Available at SSRN: https://ssrn.com/abstract=4314839 or http://dx.doi.org/10.2139/ssrn.4314839