Post by Stephen Horowitz, Professor of Legal English

On the Academic Support Professionals listserv the other day, a great question popped up:

“Can anyone recommend resources for international JD students looking to improve their baseline knowledge of the US legal/political systems and/or US history? A faculty member teaching [course name] is looking for recommendations for a student who attended high school and college outside of the US. The faculty member believes this student would benefit from resources that explain the US government at a more basic level than what is covered in the course. Thanks in advance for any resources or leads!”

I really appreciated this question for two reasons:

- (1) International JD students (i.e., students who didn’t grow up in the US education system) are a growing segment of the law school community, yet they generally don’t get the same level of legal English support that international LLM students may receive. Plus, their needs are often different from those of both regular JD students and international LLM students.

- (2) Background and cultural knowledge is such a significant component of comprehension in US law school, yet it’s difficult to acquire if you didn’t grow up with it. And if you did grow up in the US, it’s hard to even notice the challenges of functioning effectively in US law school without it (or with less of it).

I’ve been keeping my eyes open for years for resources that can help international LLM students with this, and that’s part of the reason I created the Legal English Resources page on this blog.

But until I saw the question above, I’d never organized my thoughts specifically with international JD students in mind. Yet the answers poured forth quickly and enthusiastically in my email response to the listserv. And so I figured this information might be helpful for others as well.

One of the key qualities of these resources, by the way, is that they generally don’t require much extra work on the part of the professor or student advisor. You can pretty much hand any of these off to students and let them run with it. And if one does require a little preparation, you only have to do it once.

Resources to help international JD students learn important background information about US history, the US political system, and the US legal system.

1. Civics 101 podcast (produced by New Hampshire Public Radio) – lots of short episodes on a wide range of topics. In their own words, “What’s the difference between the House and the Senate? How do landmark Supreme Court decisions affect our lives? What does the 2nd Amendment really say? Civics 101 is the podcast about how our democracy works…or is supposed to work, anyway.”

2. Street Law: A Course in Practical Law textbook – used primarily with high school students, but great for international students too. Plus, there’s a glossary in the back! I’ve used parts of the book with LLM students in the past, and I also pointed it out to a colleague, who used several chapters to develop an entire legal English criminal law course for international LLM students.

3. iCivics – an online education company that creates materials to teach civics to US students. I haven’t had occasion to use any of their materials yet, but it’s an intriguing option worth checking out. Here’s a description of who they are in their own words: “iCivics champions equitable, non-partisan civic education so that the practice of democracy is learned by each new generation. We work to inspire life-long civic engagement by providing high quality and engaging civics resources to teachers and students across our nation.”

4. Newsela.com – It’s a huge extensive reading library of real news and other articles written at 5 different levels of difficulty (or ease). And it’s accessible for free with registration. While most of it is news articles, there’s also a whole section on civics and US history with a number of articles that might be helpful. For example, I remember they have the Constitution, the Declaration of Independence, the Federalist Papers, Brown v. Board of Education, and Plessy v. Ferguson, all rewritten at 5 different levels of difficulty. There are also profiles of famous Americans, including some presidents, Supreme Court justices, civil rights leaders, etc. But you have to sift through the site to find some of this material. Also, since I last used it, they may have put some of the materials other than the news articles behind a paywall.

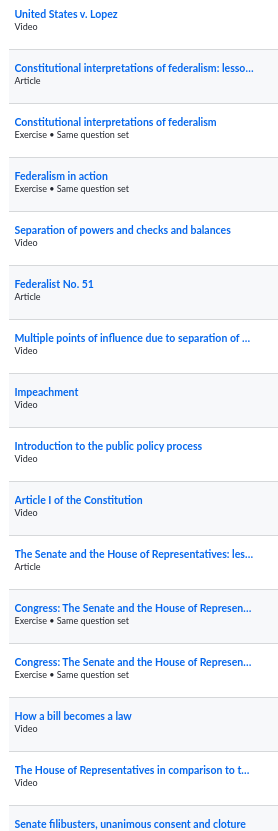

5. Khan Academy has a slew of video lessons on history and civics. The key is narrowing them down. During the pandemic, I created a Khan Academy “course” for Georgetown LLM students simply by adding the units and lessons that seemed relevant, and I told students to register and use it if they wanted to learn more beyond my actual class with them. In total, I found about 35 different lessons/items that felt relevant and appropriate to include in my “class.” There’s a screenshot below to give you a sense of some of the topics. But feel free to contact me directly if you want to know which ones they are so you can create your own class. I’m happy to share.

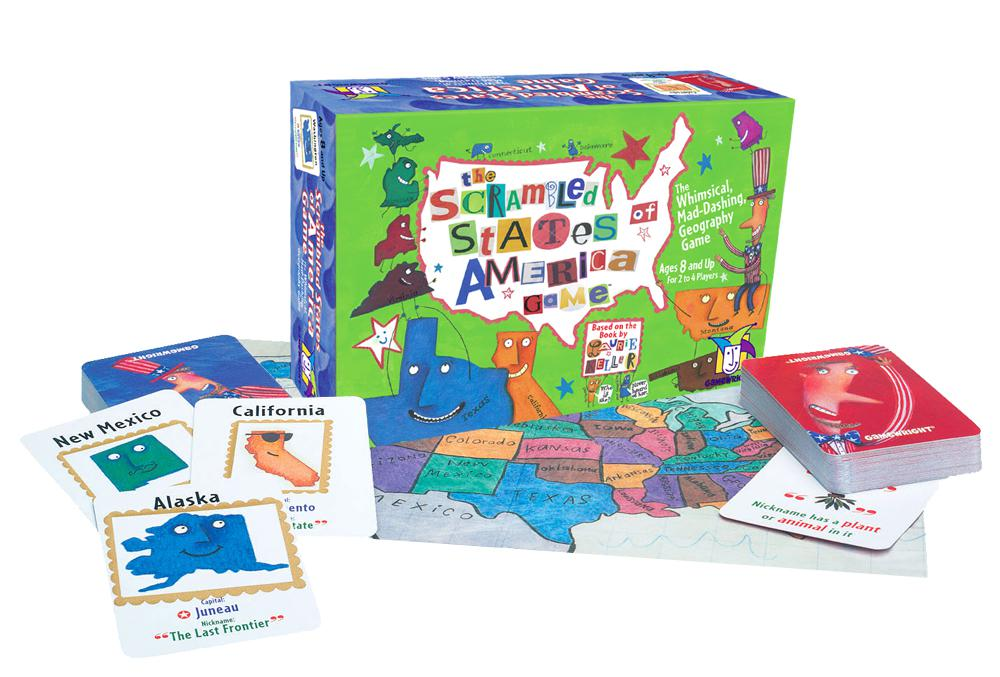

6. The Scrambled States of America (the game)

This game is based on a clever children’s book of the same name. My kids (ages 5, 7, and 11 at the time) got into it during the pandemic. The game was super fun and super easy, and within a few weeks they had all absorbed every state, state capital, and state nickname, in addition to getting a sense of where the states are located. I’ve learned over the years that my international students often have little sense of US geography outside of New York and Los Angeles. US geography is important background knowledge to have in US law school, as it often provides vital context. Yet US geography is rarely taught to international law students. And when it is, it’s hard to teach it as effectively as this game does. Let international JD students spend a couple of hours playing this, and they’ll be all set with their geography. And you’ll have a great time if you play with them!

7. Legal English Resources page on the Georgetown Legal English Blog: In addition to all the items listed above, there are many more on the Legal English Resources page. So I encourage you to take a look. Maybe you’ll find something else there that fits the needs of your students. (Or maybe you’ll have a suggestion for a helpful resource that I didn’t know about!)